Note: This article was originally published on the IDTechEx subscription platform. It is reprinted here with the permission of IDTechEx – the full profile including SWOT analysis and the IDTechEx index is available as part of their Market Intelligence Subscription.

History

Ambarella is headquartered in Santa Clara, California, US, and was founded in 2004. It began with systems-on-chip (SoCs) for HD broadcast encoders, followed quickly by video processing SoCs for digital camcorders, with Ambarella providing the chip that enabled the first Full HD camcorder. In the consumer camera market, power consumption was a critical factor: the performance required for image processing had to be balanced against the relatively small battery capacity of camcorders of the time to deliver acceptable battery life. Along with low power consumption, Ambarella identified early on that artificial intelligence technologies would become a significant part of image processing in the future. As its image processing products developed, and as the automotive market separately began incorporating more cameras into vehicles, Ambarella diversified and began selling into the automotive market as well. Today it has two main target markets: AI processing in automotive markets and in artificial intelligence of things (AIoT), which comprises a wide range of non-automotive markets, including professional security and body-worn cameras, industrial robotics, and consumer products such as home-security and sports cameras, as well as video conferencing and consumer robotics.

Technology

Automotive chip technologies

Ambarella’s point of entry into the automotive market was in video recording, which was strongly in line with its existing competencies. Ambarella’s chips were first used in black-box style video recording, a very common feature found on vehicles in the Chinese and Japanese markets. These early SoCs were not intended for advanced driver-assistance system (ADAS) applications, only image capture for applications like dash-cam recording and digital wing mirrors. When ADAS features like automatic emergency braking and lane keep assist became more common in vehicles, Ambarella produced a new product with perception capabilities that could enable these features.

The CVflow line of AI vision SoCs was launched in 2017, after a period of intense internal development and the incorporation of IP from the 2015 acquisition of VisLab, a key computer vision start-up from Italy. A spinout from the automotive R&D labs at the University of Parma, VisLab had been working on autonomous driving (AD) technologies using camera-based systems since 1998. Its design required a lot of computer processing, and VisLab used a total of 18 computers mounted in the car to run the autonomous driving software it developed. After the acquisition by Ambarella, and leveraging Ambarella’s image processing know-how, the autonomous controller was shrunk from 18 computers in the trunk, back in 1998, to a slim briefcase-sized controller today. That controller uses a single Ambarella CV3-AD AI domain controller SoC to do all of the vehicle’s perception, fusion and path planning, running Ambarella’s full AD software stack and leaving plenty of processing headroom for other functions. The CVflow line of products, starting with CV1, added perception capabilities so that key features like lane markings and pedestrians could be identified digitally and used in ADAS applications. CV1 was launched in 2017 and was followed by CV2 in 2018. Now Ambarella is on CV3-AD, first launched in 2022.

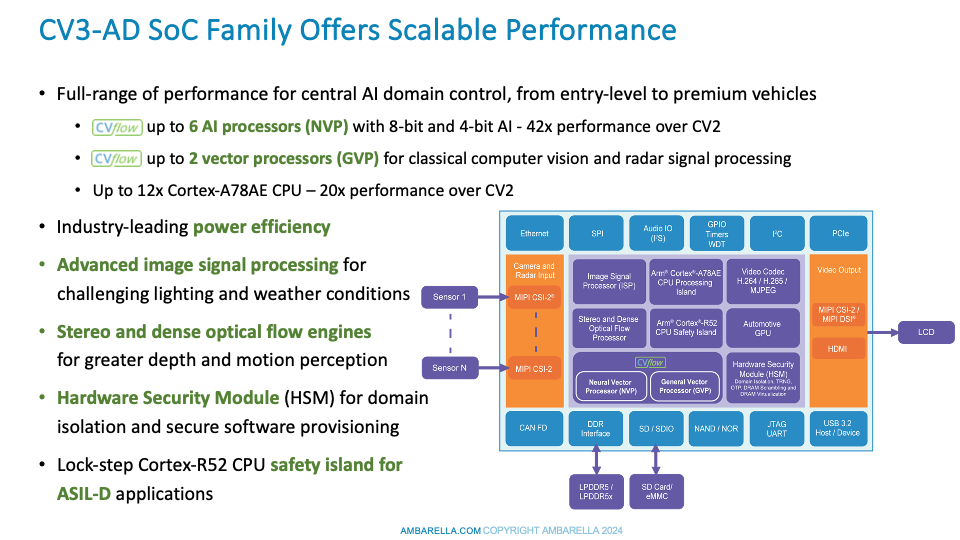

Ambarella has several variations of the CV3-AD chip, and began with the most powerful CV3-High, which is aimed at high-performance, SAE L4 AD applications. The entire CV3-AD family is manufactured using a cutting-edge 5nm node process technology, one of the most advanced that IDTechEx has seen in automotive applications. This offers plenty of computational performance and efficiency. The small node size also allows Ambarella to pack a wider variety of processor types into one SoC. It has been common for ADAS SoCs to include both CPU and GPU cores, but more recently NPUs (neural processing units) have become common in ADAS SoCs as well. This newer processor type is optimized for computation related to AI workloads, such as computing neural networks which typically require many parallel threads to complete quickly. Variations of neural networks and other AI technologies are commonly used in ADAS SoCs for tasks such as image classification, trajectory tracking, trajectory prediction, and more. The CV3-AD chips use an NPU, and although Ambarella has used the terminology neural vector processor (NVP), IDTechEx understands it to have similar functionality and purpose as an NPU.

The CV3-High was launched in 2022 and was targeted at the premium end of the automotive market. It was followed by the CV3-AD685 in early 2023, and more recently the CV3-AD655 and CV3-AD635 in early 2024, each with reduced performance and reduced functionality. The CV3-AD685 targets SAE L3/4 applications while the entry-level CV3-AD635 is aimed at mainstream SAE L2+ vehicles. Additionally, the family includes the CV72AQ, which is aimed at the Chinese market where ASIL functional-safety isn’t required in the SoC, and where 5-camera automated parking systems are commonplace, a feature that is considered premium in the US and European markets. The full family of products and performance differences are summarised in the graphic below.

Ambarella in-house autonomous driving solution

The CV3-AD SoC family has been adopted by tier-one suppliers, such as Continental and Bosch, to include in their ADAS products. In addition to this, Ambarella has been developing its own autonomous driving software stack, as well as an evolving fleet of R&D vehicles to collect data and provide customers with a proof-of-concept for its technologies. The latest members of this R&D fleet each have a sensing suite of 18 cameras and five radars along with a single CV3-High SoC to deliver Level 4 driving capabilities. Cameras are readily available to the automotive market. Smartphones have driven image sensor technology to such high resolutions that, until recently, automotive SoCs have not had the processing power to cope with all the data the sensors produce; nor have automotive systems really needed that level of image resolution for their computer vision systems (currently, the highest resolution for automotive forward-facing, long-range cameras is 8MP). However, high-performance radar is something that the automotive market has been lacking, with many start-ups emerging to address the technology void. Oculii™ was one of those start-ups, and it was acquired by Ambarella in 2021.

Now known as Ambarella’s Oculii AI radar technology, it takes a different approach from most others working in next-generation 4D imaging radars. For many of the leading new radar products, the approach to improving performance has been to add more channels to the radar, like adding pixels to a camera. Radars are made up of transmitting and receiving channels, and the product of the two channel counts gives the number of virtual antennas (VAs). Leading tier-one radars have 192 VAs. Newcomers like Arbe and Mobileye are making radars with more than 2,000 VAs. However, there are limitations to putting more channels on the radar. As with pixels in a camera, more channels require more processing power, which typically results in a lower frame rate and increased power consumption. More channels also require a larger antenna array, which leads to a larger overall package. Oculii technology uses 12 VAs, in a simple 3Tx (transmitting channel) and 4Rx (receiving channel) arrangement, which is typical of radars used on vehicles throughout the late 2010s and also very cheap to make. Oculii then uses AI algorithms to alter the operation of the radar on the fly, adapting it to the environment. With comparatively little data coming from the few channels, and central processing on Ambarella’s powerful SoCs, the radar can run much faster than a 2,000 VA radar. Each “radar head” (a term Ambarella uses to indicate that no processing takes place inside the edge module) generates point clouds with 10,000 points per frame, with an angular resolution of 0.5° in the azimuth and elevation directions and a range of 500+m (beyond 100m for pedestrian detection).
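The virtual antenna arithmetic described above can be sketched in a few lines. This is only an illustration of the Tx × Rx product; the function name is hypothetical, and the 12Tx by 16Rx split used to reach 192 VAs is an assumption chosen for the example, not a configuration given by IDTechEx or Ambarella.

```python
def virtual_antennas(tx: int, rx: int) -> int:
    """Number of virtual antennas: transmit channels times receive channels."""
    if tx <= 0 or rx <= 0:
        raise ValueError("channel counts must be positive")
    return tx * rx


# Oculii arrangement quoted in the article: 3Tx and 4Rx.
oculii_vas = virtual_antennas(3, 4)

# ASSUMPTION: one possible channel split that yields 192 VAs.
# The article states only the total, not the Tx/Rx breakdown.
tier_one_vas = virtual_antennas(12, 16)

print(f"Oculii: {oculii_vas} VAs, tier-one example: {tier_one_vas} VAs")
print(f"Channel-count ratio: {tier_one_vas // oculii_vas}x")
```

The ratio gives a rough sense of how much less raw channel data the 12 VA arrangement has to move and process per frame, which is the basis for the frame-rate argument above.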

One of the key features of Ambarella’s autonomous driving system is the use of central computing of all cameras and radars. This has become a common avenue of exploration for many involved in autonomous driving development, though centralized radar processing and fusion with camera data is impractical with other 4D imaging technologies. Central computing offers much more computational power compared to trying to complete lots of radar data processing on a microcontroller unit (MCU) within the sensor. It can also help with sensor fusion as it is easier to fuse the data earlier in the data processing pipeline. However, one of the issues with this architecture type is that OEMs would be forced to have the complete system or nothing. IDTechEx put this to Ambarella, which said that it can offer the radars as standalone units for OEMs to integrate into their own system, gaining the advantage of Oculii’s radar technology without committing to Ambarella’s complete system.

While Ambarella is pursuing a light, 12 virtual antenna setup with a sparse antenna array, IDTechEx was also intrigued as to what it could do with modern 192 virtual antenna hardware and a larger form factor. Ambarella reported that it had previously made a much larger demonstrator radar head to show what it could do. The result was a radar that could return an angular resolution of 0.1°, making it one of the highest-resolution radars that IDTechEx has seen. However, this radar would be impractically large for cars. Ambarella also reported that there is little extra benefit in dropping below 0.5° in autonomous cars. Despite this, a radar this size and with this much resolution could be useful in autonomous trucking.
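To put the two angular resolutions in physical terms, the sketch below converts an angular resolution into the approximate width of one resolution cell at a given range. It uses plain geometry (the chord subtended by the angle) and is not Ambarella’s method; the function name is hypothetical, and the ranges are the 100m and 500m figures quoted earlier.

```python
import math


def cross_range_cell_m(range_m: float, angular_resolution_deg: float) -> float:
    """Approximate width in metres of one angular resolution cell at a given range.

    Geometric simplification: width = 2 * R * tan(theta / 2).
    """
    half_angle_rad = math.radians(angular_resolution_deg) / 2.0
    return 2.0 * range_m * math.tan(half_angle_rad)


for resolution_deg in (0.5, 0.1):
    for range_m in (100.0, 500.0):
        width = cross_range_cell_m(range_m, resolution_deg)
        print(f"{resolution_deg}° at {range_m:.0f} m -> {width:.2f} m per cell")
```

At 100m, a 0.5° cell is a little under 0.9m wide, while a 0.1° cell is under 0.2m. The finer resolution therefore matters most at long range, which fits the suggestion that it may be more useful for trucking than for cars.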

Security cameras and video conferencing

While visiting Ambarella, IDTechEx also saw its current work in security camera and consumer camera systems (video doorbells, action cameras, etc.). One of the biggest developments in this space is the emergence of multimodal generative AI. ChatGPT introduced the world to large language models (LLMs) and their ability to replicate human-like conversation. Large multimodal models go one step further by incorporating new input and output media, beyond text-only interactions. In a security camera system, multimodal LLMs and lighter weight vision language models (VLMs) can provide contextual awareness and a natural-language interface for all the video feeds the system has recorded, meaning a user could ask “Has anything suspicious happened?” and the AI would play a recording of suspicious things that it has seen. These applications are possible thanks to the edge AI capabilities of Ambarella’s SoCs, which provide a very high level of AI performance per watt due to the extremely efficient CVflow AI architecture. CVflow is now in its third generation since Ambarella’s first family of vision AI SoCs was launched in 2017.

This generative AI functionality is also supported on many of Ambarella’s automotive SoCs, and the company expects GenAI to also become a trend in the automotive market (building on the transformer neural networks that are already being deployed on its CV3-AD SoCs).

Business model and market

Ambarella has two markets that it focuses on: the broad and multifaceted AIoT camera market and automotive. Within the AIoT camera products market, IDTechEx believes Ambarella has strong partnerships with ODMs that customers can tap into at the manufacturing stage, while Ambarella works closely with customers during the design stage, sometimes in conjunction with design houses but more frequently directly. It essentially acts as a tier-one, offering its chips and comprehensive software tools as a solution for AI and image processing on professional security and robotics devices, as well as consumer devices like action cameras, doorbells, home security systems, video conference equipment, and more. Here customers will be interested in power efficiency and the ability to provide high-performance image processing functions such as image stabilization in action cameras. Increasingly, AI has also become a key feature and marketing term for its customers in the consumer product space, while becoming a standard and growing set of features in professional AIoT products.

Within the automotive market, Ambarella is a tier-two supplier. Its customers are the likes of Bosch, Continental, and other tier-ones, though it also works directly with OEMs in some cases. They buy Ambarella’s chips to use in their ADAS products, such as lane keep assist systems, automatic emergency braking, automated parking features, and camera-based visualization, like electronic mirror systems and 360° parking camera systems. Ambarella’s autonomous driving solutions, with a full AD software stack, Oculii radar software, and central computing, are more in line with a tier-one product. However, IDTechEx expects that OEMs would purchase this product either directly from Ambarella or in partnership with the OEM’s favored tier-one, which could handle product production and supply. Leading tier-ones, such as Continental and Bosch, already have their own radar products, so it could be difficult for Ambarella to sell a complete AD system to a tier-one, as they would prefer to sell their own sensors.